Open WebUI is an extensible, feature-rich, and user-friendly self-hosted WebUI designed to operate entirely offline. It supports various LLM runners, including Ollama and OpenAI-compatible APIs. For more information:

This guide will get you a fully working stack that is:

- Easy to access

- Upgradeable

- Secure

- Simple to debug

Prerequisites

- Synpse account (free)

- Linux machine with a GPU

- Internet connection to download the artifacts (model weights are large). Once downloaded, the model runs locally without sending anything to the cloud.

Setting things up

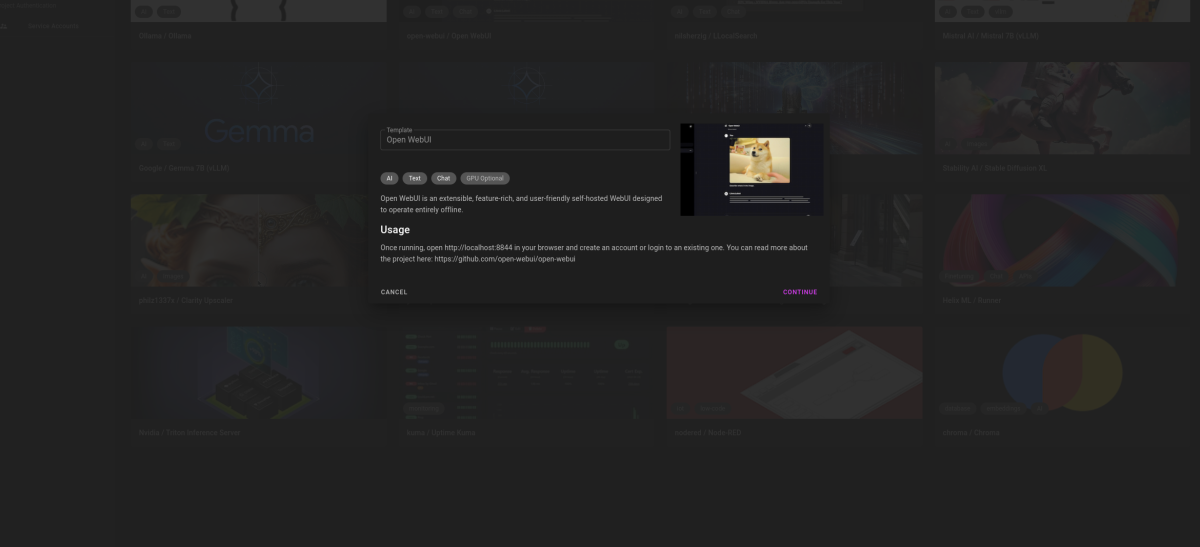

Go to https://cloud.synpse.net/templates?id=open-webui and follow the instructions:

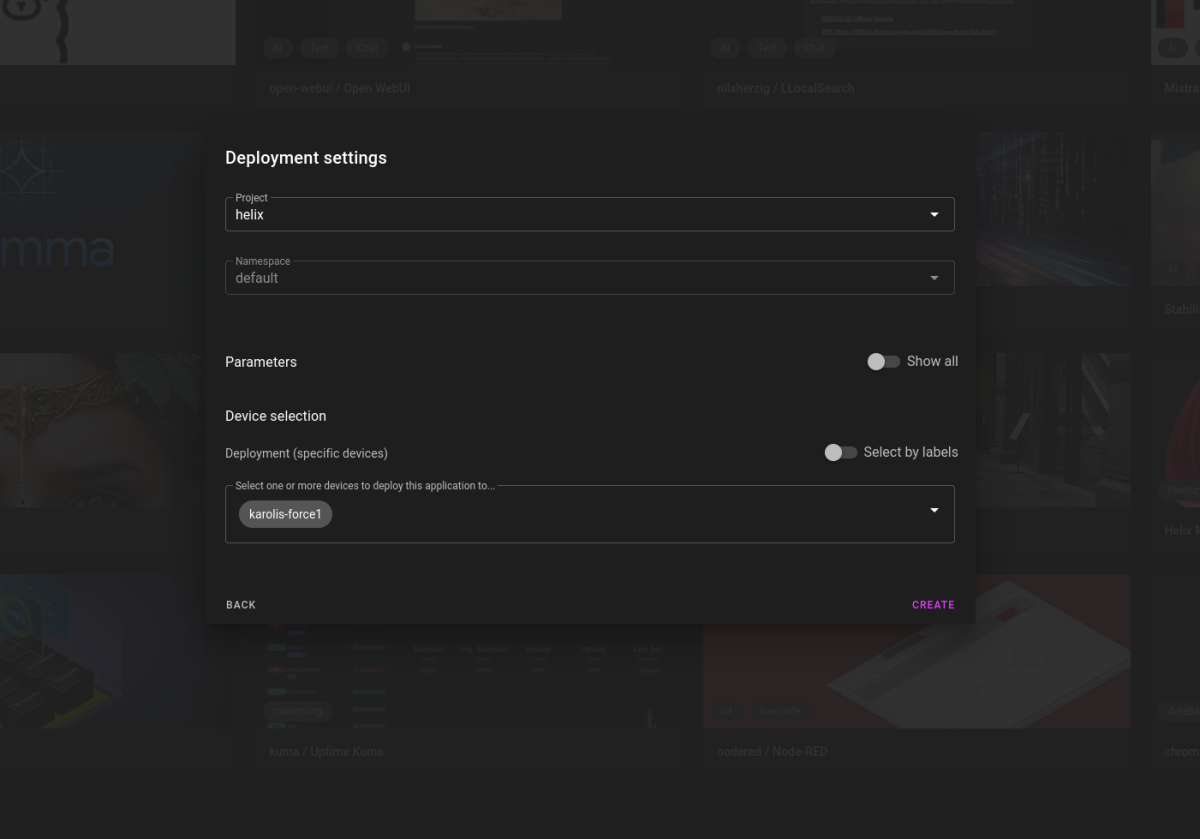

Select your default project and device, then click Create:

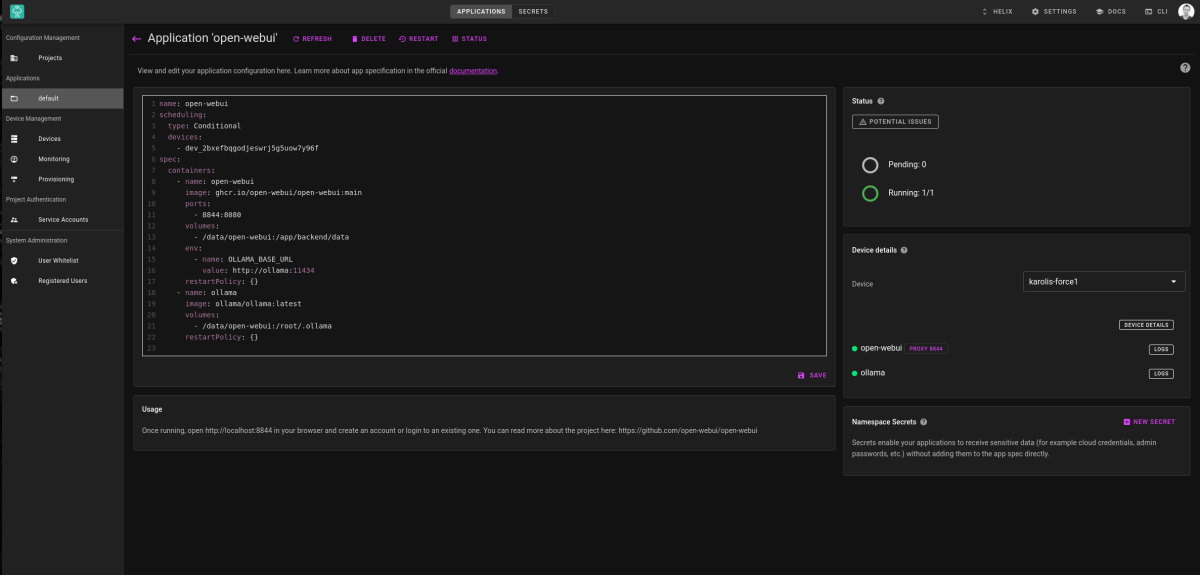

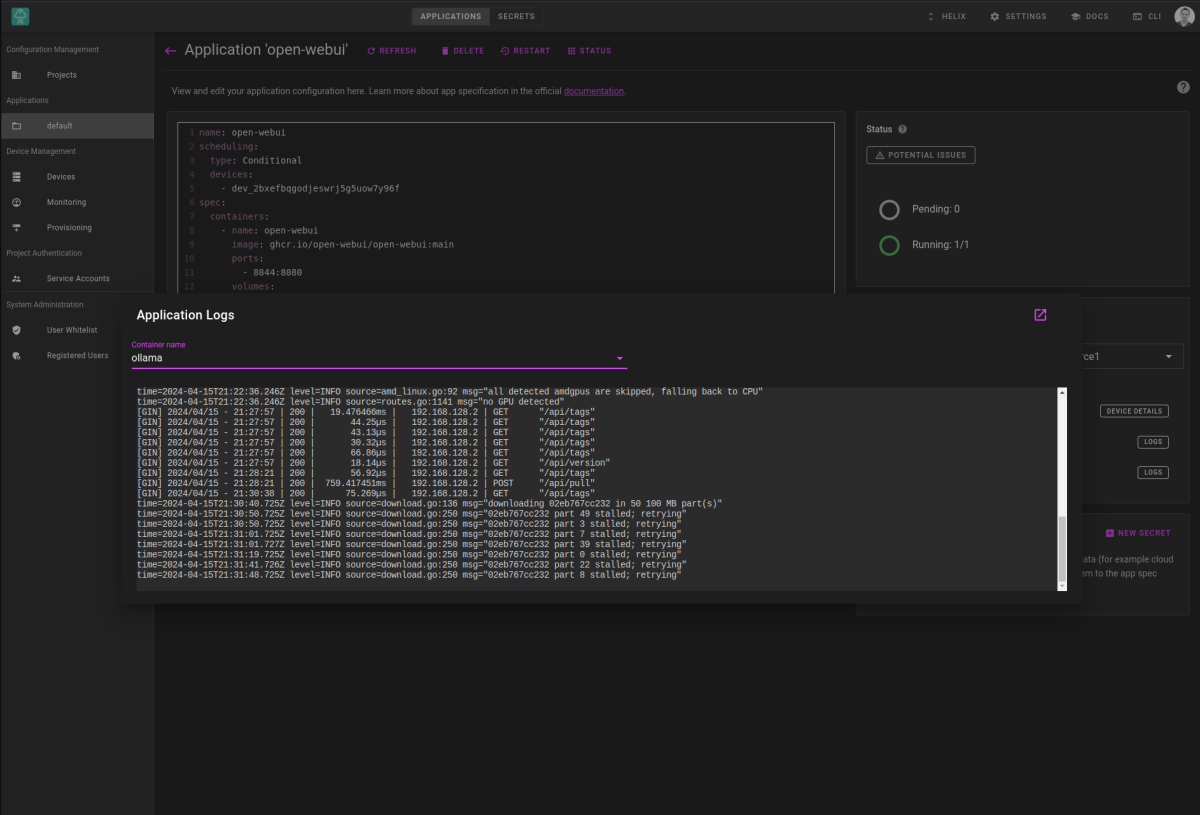

You should be redirected to the application page, where you can see that the template consists of two containers:

- open-webui (the UI and backend)

- ollama (our LLM server)

The application deployment looks like the image below. From here you can view logs and container statuses.

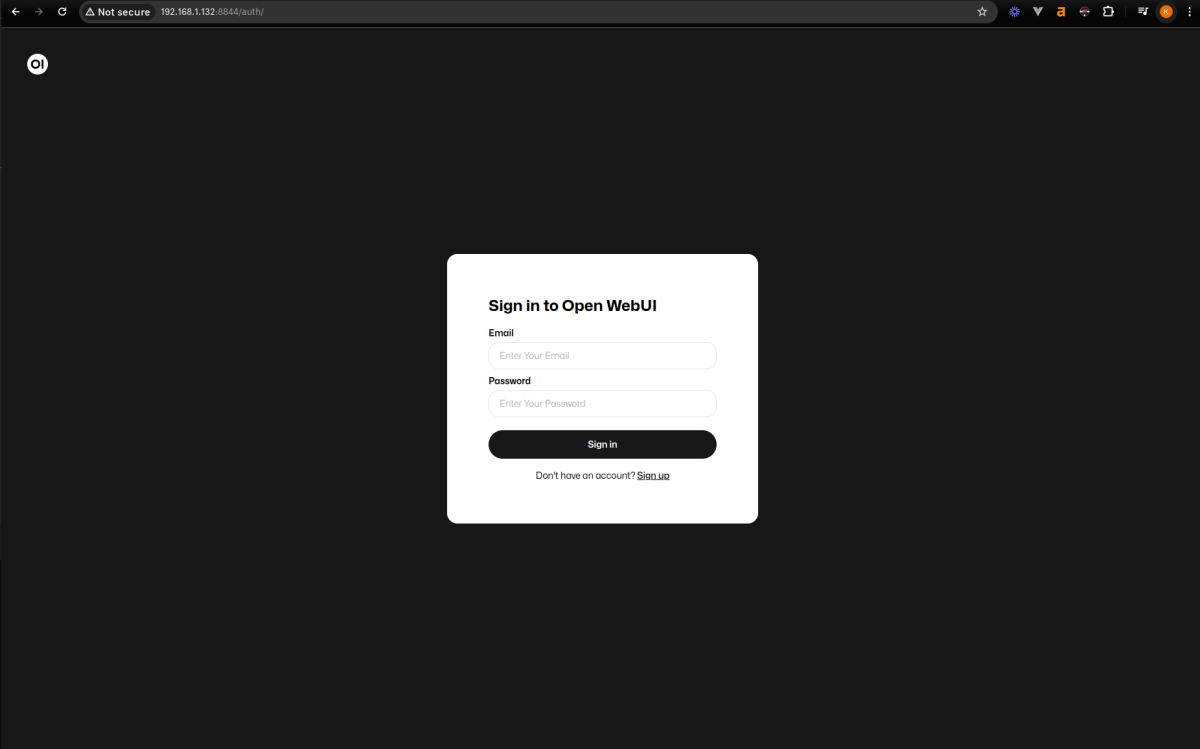
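Open WebUI does not run models itself; it talks to the ollama container over HTTP. As a rough illustration of that conversation, the Python sketch below asks Ollama which models are installed via its `/api/tags` endpoint, which is roughly the kind of request Open WebUI makes to fill its model list. It assumes Ollama listens on its default port 11434 and that this port is reachable from where you run the script (for example, on the device itself); the template may not expose it. `model_names` and `list_models` are hypothetical helper names, not part of either project.

```python
import json
import urllib.request

# Assumption: Ollama's default API address; the template may not expose this port.
OLLAMA_URL = "http://localhost:11434"


def model_names(tags_response: dict) -> list[str]:
    """Extract model names from an Ollama /api/tags JSON response."""
    return [m["name"] for m in tags_response.get("models", [])]


def list_models(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> list[str]:
    """Ask Ollama which models are installed (GET /api/tags)."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return model_names(json.load(resp))
```

Before you download anything, `list_models()` should return an empty list; after the download it should include the model you picked.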

Accessing the UI

There are several ways to access your UI. One way is to access it directly using the device IP. For example, if my device IP is 192.168.1.132, I can access it by typing http://192.168.1.132:8844 in my browser:

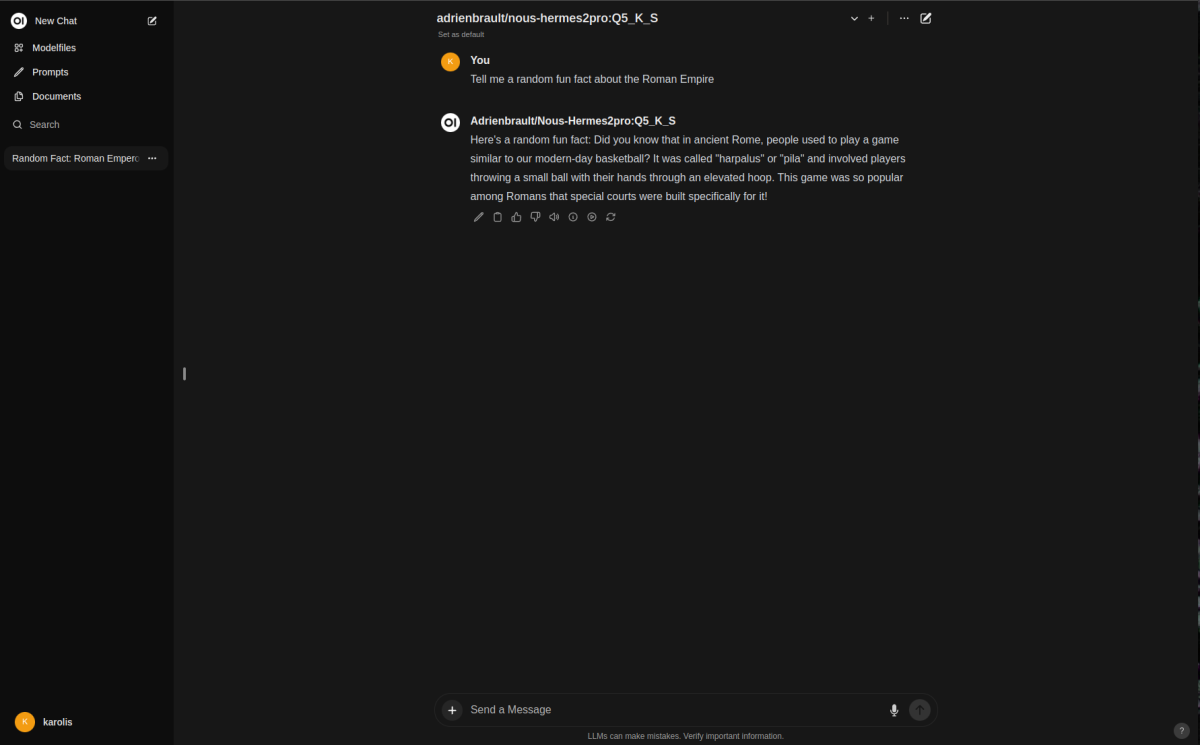
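If the page does not load, it helps to check whether anything answers on that address at all. A minimal sketch, assuming port 8844 as used in this guide; `ui_url` and `is_reachable` are hypothetical helpers:

```python
import urllib.error
import urllib.request


def ui_url(host: str, port: int = 8844) -> str:
    """Build the Open WebUI address for a device IP (8844 is the port used in this guide)."""
    return f"http://{host}:{port}"


def is_reachable(url: str, timeout: float = 3.0) -> bool:
    """Return True if an HTTP server answers at url, False on connection errors."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server answered, just not with a 2xx status: it is still reachable.
        return True
    except OSError:
        # URLError (refused, unknown host) and timeouts are both OSError subclasses.
        return False
```

If `is_reachable(ui_url("192.168.1.132"))` returns `False`, the usual suspects are a wrong IP, a firewall, or containers that have not started yet; the container statuses in the deployment view are the next place to look.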

Once the UI is open, you will need to download a model. I went with adrienbrault/nous-hermes2pro:Q5_K_S because I found it works really well for LLM tool use and function calling.
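The model name follows Ollama's `namespace/model:tag` convention, where the tag (`Q5_K_S` here) selects a quantized variant. This guide downloads the model from the UI, but the same download can be triggered directly against Ollama's `/api/pull` endpoint. The sketch below assumes Ollama's default port 11434 is reachable and uses the `model` request field from recent Ollama API docs (older versions used `name`); `split_model_ref`, `pull_request` and `pull` are hypothetical helpers.

```python
import json
import urllib.request

# Assumption: Ollama's default API address; the template may not expose this port.
OLLAMA_URL = "http://localhost:11434"


def split_model_ref(ref: str) -> tuple[str, str, str]:
    """Split 'namespace/model:tag' into its parts; the tag defaults to 'latest'.

    Simplified: registry hosts with a port (host:5000/...) are not handled.
    """
    name, _, tag = ref.partition(":")
    namespace, _, model = name.rpartition("/")
    return namespace, model, tag or "latest"


def pull_request(model: str, base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST /api/pull request for the given model."""
    body = json.dumps({"model": model, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/pull",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def pull(model: str, base_url: str = OLLAMA_URL, timeout=None) -> dict:
    """Send the pull request and return Ollama's final JSON reply (blocks until done)."""
    with urllib.request.urlopen(pull_request(model, base_url), timeout=timeout) as resp:
        return json.load(resp)
```

With streaming disabled, `pull("adrienbrault/nous-hermes2pro:Q5_K_S")` blocks for the whole download, which can take a while for large weights, and should return `{"status": "success"}` when it finishes.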

You can also access the UI by using the proxy command that appears in the application deployment view (its placeholders will already target your project and device).

While this command is running, you can access the UI by opening http://localhost:8844 in your browser. This is handy when the device is remote and you want to use the UI from your local machine.
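We don't know exactly how Synpse implements its proxy command, so the sketch below only illustrates the general idea of local port forwarding: a listener on 127.0.0.1:8844 that relays every connection to the device. Unlike the Synpse command, this asyncio version needs direct network access to the device; `start_forwarder` is a hypothetical helper, and the device IP in the usage note is the example address from above.

```python
import asyncio


async def _pipe(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
    """Copy bytes from reader to writer until EOF, then half-close the writer."""
    while data := await reader.read(65536):
        writer.write(data)
        await writer.drain()
    if writer.can_write_eof():
        writer.write_eof()


async def start_forwarder(remote_host: str, remote_port: int,
                          local_port: int = 8844) -> asyncio.AbstractServer:
    """Listen on 127.0.0.1:local_port and relay each connection to remote_host:remote_port."""

    async def handle(client_reader: asyncio.StreamReader,
                     client_writer: asyncio.StreamWriter) -> None:
        try:
            remote_reader, remote_writer = await asyncio.open_connection(remote_host, remote_port)
        except OSError:
            client_writer.close()  # remote side unreachable: drop the local connection
            return
        try:
            # Relay both directions concurrently; an error just ends this connection.
            await asyncio.gather(
                _pipe(client_reader, remote_writer),
                _pipe(remote_reader, client_writer),
                return_exceptions=True,
            )
        finally:
            client_writer.close()
            remote_writer.close()

    return await asyncio.start_server(handle, "127.0.0.1", local_port)
```

For example, awaiting `start_forwarder("192.168.1.132", 8844)` and then calling `serve_forever()` on the returned server would make http://localhost:8844 show the UI, as long as your machine can reach the device directly.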

Checking under the hood

While the model downloads, we can take a peek at how things work. In the application deployment view, click logs for ollama or open-webui to see the output of either process.
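If you would rather follow a download from a script, Ollama's `/api/pull` streams newline-delimited JSON objects by default: each has a `status`, and while layers download they also carry `total` and `completed` byte counts. The sketch below turns those lines into progress percentages. The field names are taken from the Ollama API docs and may change between versions; `pull_progress` is a hypothetical helper. It accepts `str` or `bytes` lines, so an HTTP response object can be passed in directly.

```python
import json
from typing import Iterable, Iterator, Optional, Tuple, Union


def pull_progress(lines: Iterable[Union[str, bytes]]) -> Iterator[Tuple[str, Optional[float]]]:
    """Yield (status, percent) for each JSON line streamed by Ollama's /api/pull.

    percent is None for events without byte counts (e.g. "pulling manifest").
    """
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        event = json.loads(line)
        total, completed = event.get("total"), event.get("completed")
        percent = round(100 * completed / total, 1) if total and completed is not None else None
        yield event.get("status", ""), percent
```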

Conclusion

Once the download is finished, data will be persisted in the /data/open-webui directory, and you can start chatting with your model.
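Since model weights are large, it can be useful to check how much space the persisted data takes up. A minimal sketch to run on the device, assuming the /data/open-webui path from this guide (reading it may require root, and whether the model weights live there or in a separate volume depends on the template's mounts); `dir_size_bytes` is a hypothetical helper:

```python
import os


def dir_size_bytes(path: str) -> int:
    """Total size in bytes of regular files under path, without following symlinks."""
    total = 0
    # os.walk does not descend into symlinked directories by default.
    for root, _dirs, files in os.walk(path):
        for name in files:
            full = os.path.join(root, name)
            if not os.path.islink(full):
                total += os.path.getsize(full)
    return total
```

For example, `dir_size_bytes("/data/open-webui") / 1e9` gives the size in gigabytes.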

We have deployed Open WebUI on our local server. This is a great way to have a self-hosted AI tool that works offline.

Check out our other templates from the gallery or just deploy your own dockerized application. If you have any questions or suggestions, feel free to reach out.

Turning off the deployment

If you don’t want to leave it running, you can update

to:

This will tell Synpse to remove the containers. Data will be kept, so when you start it again, everything will be as you left it.